What Makes Meta AI's Llama 3 So Great

Meta released Llama 3, a new series of AI models, last week, and the company claims it is among the best open models available. Llama 3 is trending for good reason: its performance so far puts it well on the path to becoming one of the leading AI models.

I have been using Meta AI every day since its release, and I'll say this right off the bat: I'm truly impressed, especially for a model that is free. On top of that, Meta has built the assistant into some of its other products, so people can find it where they already are and use it for day-to-day needs, like planning a dinner or preparing dishes from what's available in the fridge.

Firstly, the user interface (UI) is actually pretty. It has a simple, minimalist design, yet it's fresh and moderately stylish, with interesting, subtle animations as you interact with it. That's way more than I get with ChatGPT-3.5, and I like that.

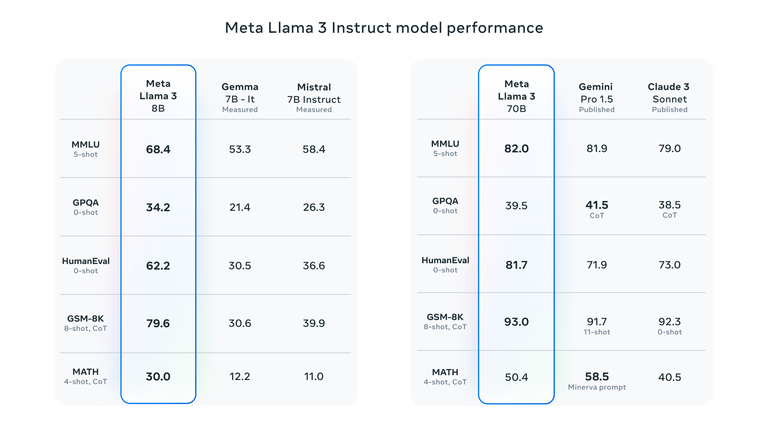

Llama 3 Models and Benchmarks

There are two models in the Llama 3 release, Llama 3 8B and Llama 3 70B, with eight billion and seventy billion parameters, respectively. Their benchmarks are surprising: the models compete with, and in some cases surpass, strong existing AI models like Mistral 7B Instruct, Claude 3 Sonnet, and Google's Gemini Pro 1.5.

The benchmarks show that Llama 3 is a state-of-the-art language model capable of a wide range of use cases. Meta is still training its models, meaning that they will only get better from now on, with even more models coming soon, including one with 400B parameters.

The models come in pre-trained and instruction-fine-tuned versions: they are first trained on a vast amount of data to learn patterns in language, then fine-tuned to follow instructions, which greatly improves their ability to generate coherent responses.

Parameters are basically internal model variables used to process and generate text. The more parameters, essentially, the higher the ability to understand patterns and generate more nuanced text.
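To make the idea of parameters a bit more concrete, here is a minimal Python sketch. It counts the parameters of a single toy fully connected layer, then roughly estimates how much memory the weights of the two Llama 3 sizes would need. The function names, the 4096-wide toy layer, and the 2-bytes-per-parameter figure (16-bit precision) are assumptions chosen for illustration; they are not taken from Meta's release, and real deployments vary.

```python
# Illustrative sketch only: names and figures below are assumptions,
# not details from Meta's Llama 3 release.

def dense_layer_params(n_inputs: int, n_outputs: int) -> int:
    """Parameters in one fully connected layer: a weight per
    input/output pair, plus one bias per output."""
    return n_inputs * n_outputs + n_outputs


def weight_memory_gb(num_params: int, bytes_per_param: float = 2.0) -> float:
    """Rough memory needed just to store the weights, in gigabytes.

    Assumes 2 bytes per parameter (16-bit precision) by default.
    """
    return num_params * bytes_per_param / 1e9


# Parameter counts as stated in the article.
MODEL_SIZES = {
    "Llama 3 8B": 8_000_000_000,
    "Llama 3 70B": 70_000_000_000,
}

if __name__ == "__main__":
    # A single toy layer already holds millions of parameters.
    print("Toy layer parameters:", dense_layer_params(4096, 4096))
    for name, count in MODEL_SIZES.items():
        print(f"{name}: ~{weight_memory_gb(count):.0f} GB at 2 bytes per parameter")
```

Under these assumptions, the larger model needs almost nine times the memory of the smaller one just to hold its weights, which is part of why more parameters come at a cost.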

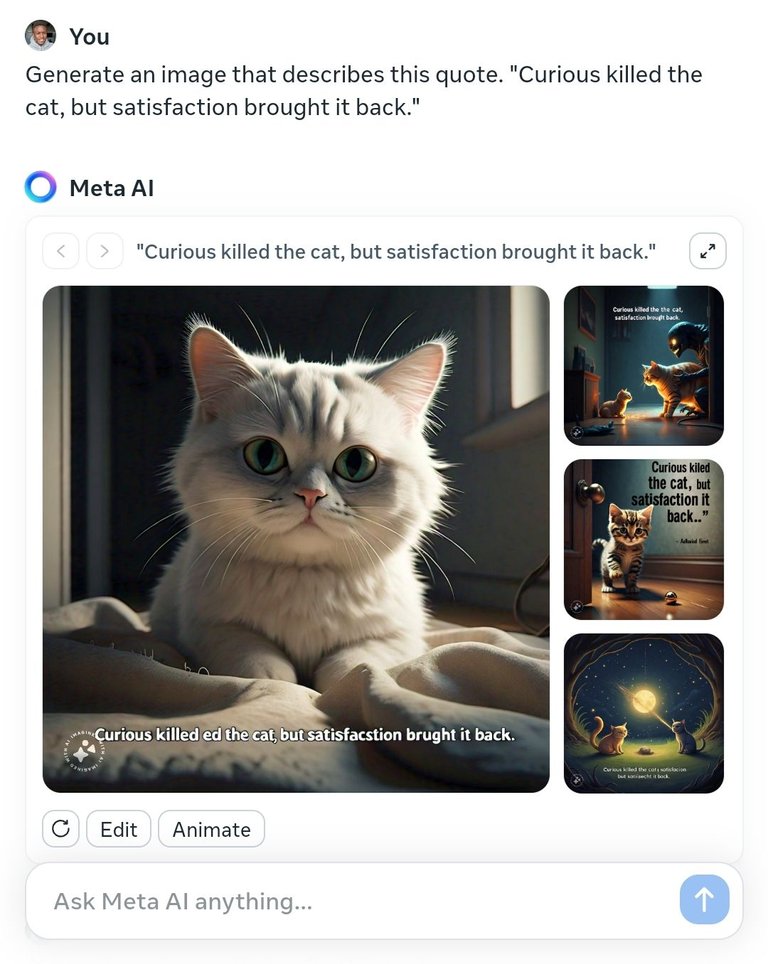

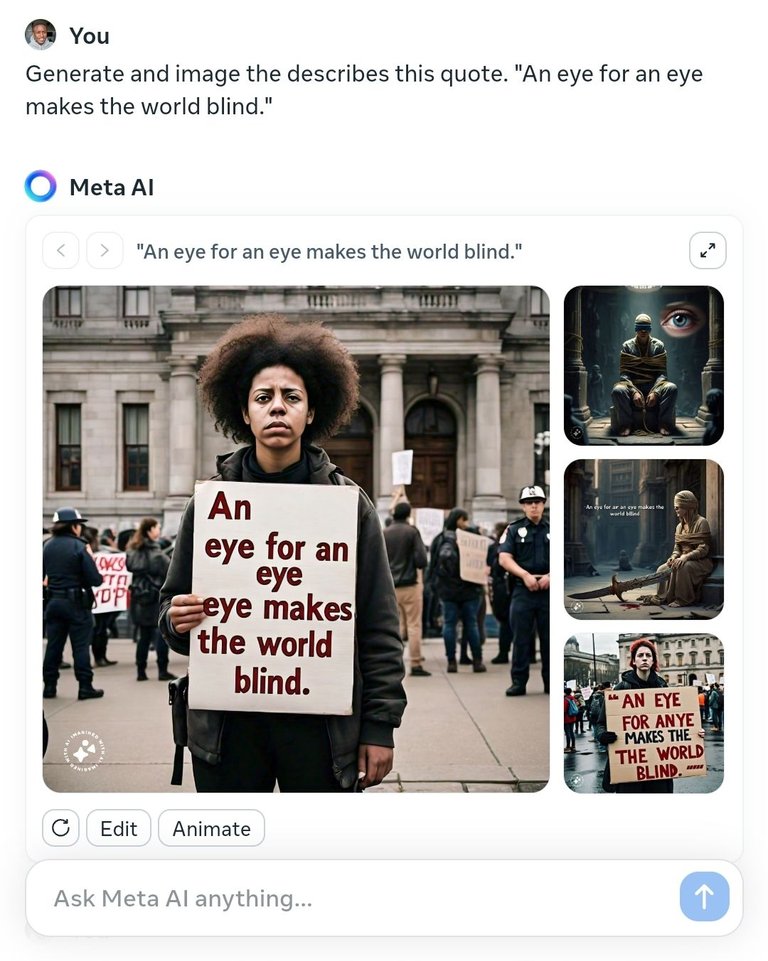

Meta AI also comes with free image-generation capabilities that perform very well and compete strongly with DALL-E. It understands prompts well and imagines something that, to me, is acceptable. It can even put text where necessary in the images it generates. Although the "text in images" feature hasn't been a perfect experience for me, it can be managed with the right instructions.

Comparison with ChatGPT-3.5

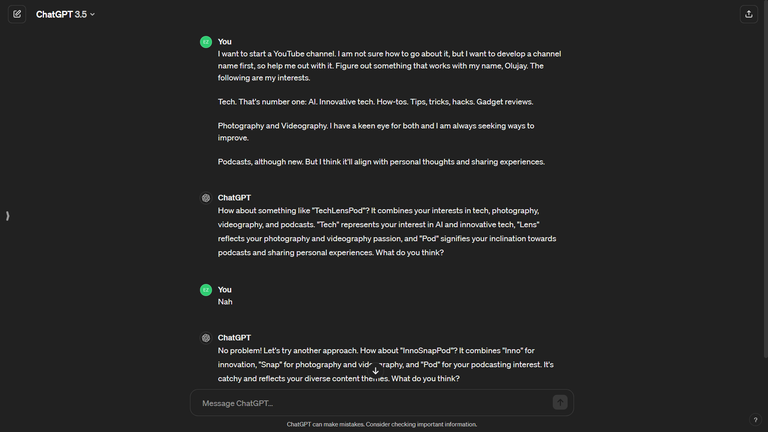

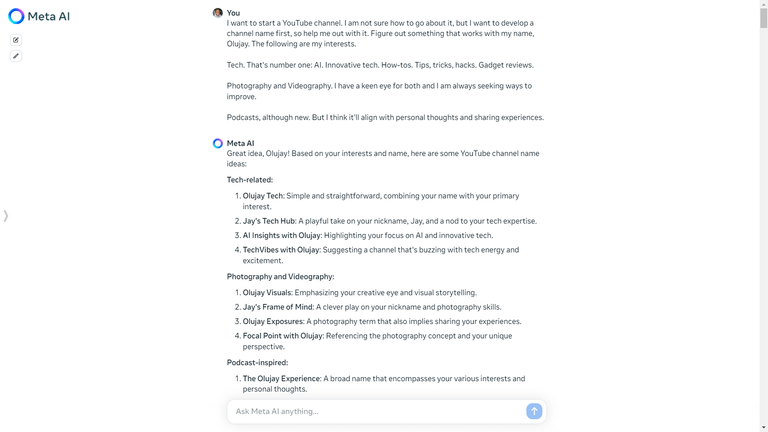

Having spent a lot of time using ChatGPT-3.5 for many different cases, I find my experience with Meta AI running on Llama 3 totally different and even way better. Using the same prompt for both AI chatbots shows the difference quite easily. For example, I am developing a niche for myself by branching out into YouTube, so I turned to AI for ideas and help.

When I used ChatGPT-3.5, what I got was honestly nothing like what I was going for. Admittedly, with a little more detail and a more nuanced construction of my prompt, it could have given me something better.

With Meta AI, however, I found myself going deeper and deeper into conversations with it, as I was thoroughly enjoying and getting inspired by what it was delivering, down to creating logos and channel artwork. To me, Llama 3 is more intuitive and "gets the memo" better.

Meta AI also has access to real-time information from the web, making it efficient for restaurant recommendations, planning weekend getaways, and searching for concerts. It is available on Facebook, Instagram, WhatsApp, and Messenger, so accessing it is actually way easier than with any other chatbot out there. You only need to log in if you want it to save your conversations. And, yes, you can use Meta AI without even being logged in.

Image Generation Impressions

The image generation feature is actually fast. In a matter of seconds, it generates images that are sharp and of high quality. And its imaginative capabilities are nothing short of impressive.

I simply ran a few quotes through it and asked it to "generate images that best describes them." To my surprise, it generated images that represent the quotes very well based on context and real-life scenarios, and it even included the text of those quotes in some of the images.

The images above were generated for a recent blog post of mine, 5 Quotes You Thought You Knew. If you look closely, past the sharp and clear details of some of the images, you'll see that the AI misspelt some words. Nonetheless, its performance was impressive.

Open-Sourcing and Future Developments

Meta says that Llama 3 is open source, and that it made it so because of how much development and improvement open-sourcing makes possible. To me, however, it isn't really that open source, given the constraints placed on the model.

Llama 3's family of models may have been made available for both research and commercial applications. However, Meta forbids developers from using Llama models to train other generative models, while app developers with more than 700 million monthly users must request a special license from Meta that the company will — or won’t — grant based on its discretion. Source

Notwithstanding, what we have so far on the consumer end is yet another leap in AI development, with greater potential to improve our daily lives now that these powerful tools are available.

“Our goal in the near future is to make Llama 3 multilingual and multimodal, have longer context and continue to improve overall performance across core [large language model] capabilities such as reasoning and coding,” Meta writes in a blog post. “There’s a lot more to come.”

What do you think about the Llama 3 series? You can try out Meta AI running on Llama 3 for free on the web at meta.ai, or find it in any of Meta's social media applications.

Thumbnail image by Meta AI. Other images are the property of Meta.

Thanks for the update friend. It seems impressive. I'll try it out and maybe give a feedback

I'd love to know what you think.

It is really a nice AI. After I finished chatting with it, I realized that the world has moved on. I don't think we need a dictionary nowadays; Llama is capable of giving solutions that seemed impossible.

Dictionary? Man, it's been ages since I used/heard that word. The world's more sophisticated now.

I don’t know why my phone keeps logging itself anytime I tap on this post but that ghost should be careful

Anyway, I got sold at restaurant recommendation and planning weekend getaways

My getaways just got better!!!

That's strange, and funny. Lol. Is that Ecency?

Seems to me you want to plan specials with him. Go for it!

Yeah Ecency

There’s no him!

Is Ecency still acting that way?

Yeah

On a daily basis I use a combination of Bing Copilot, ChatGPT 3.5 and Mistral Large for certain tasks at work, mainly for research and some automation with reports. AI imo is still a cool advanced search engine; I haven't found one advanced enough, and I think this is intentional for free models, although for image generation they're awesome. Will look into Llama 3 this weekend ✌️

Very productive of you. I do something similar with GPT-3.5 and Perplexity AI as well. I don't really use Bing Copilot. I'll stick with Meta AI for now. I'd like to know what you think of Llama 3 in the future.

Thank you. 💚

Yes, it is very interesting to use and friendly too

Thanks for the comprehensive information.

Nice. Good to know you use it as well. You're welcome.

Very interesting piece. Great comparison with ChatGPT, but I think you should have used the same prompt for both queries.

Meta's Llama has great prospects.

Thanks, man. I actually used the exact same prompt for both chatbots, and that's why I think Meta AI responds better.

Llama does have great potential.

Thank you for this fantastic post @olujay I have yet to try out Llama. This input of yours makes that decision very simple. I need to try it out ASAP.

Great explanation too. I am sure everyone will get a picture of what you are talking about. Excited about you wanting to be on YouTube too. I am also thinking something along those lines but later in the year :)

Cheers from a fellow #dreemerforlife

And what do you think of Meta AI so far, if you found the time to try it?

About the YT channel, I'm just going to jump at it and start anyway.

I have not yet got an opportunity to try out Llama. Will be checking out soon once the current work activity completes

I am interested in the images this AI can generate. I just tried generating one using the link you provided, but it's asking that I log in first. I will surely do that tomorrow.

Thanks for sharing, robotic Jay

I jumped and passed this post when I first saw it in hl podcast, lolz..but dreemport said no! Face your fear.. haha 😂

Here am I , obeying the call, as a true dreemer 😍

#dreemerforlife

Oh, yes. You have to log in before you can generate images. And it makes sense so that people don't generate provoking images anonymously.

Thank you always, Nkem. 😁

You are really what is called Tech guy. The school fees that was spent on your head was well spent my dear.

Breezed in from #dreemport 🙂

What??? 🤣

Thank you, CEO Bipolarnation. I try my best.

😂🤣 You have given me title?? Thank you I accept don't mention 😂

Better. Lol