AI-generated images from my custom diffusion models

Hello everyone, I hope you are doing fine. I haven’t been able to post much recently, as I spent the last week and a half training and testing two custom AI diffusion models. For more info on how you can create your own AI diffusion model, take a look at this excellent tutorial by @kaliyuga.

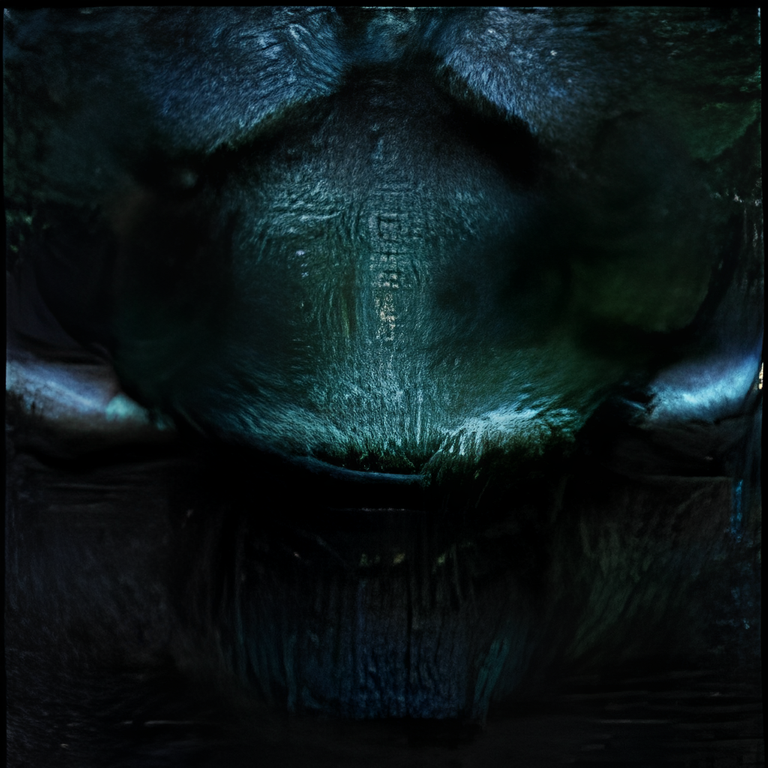

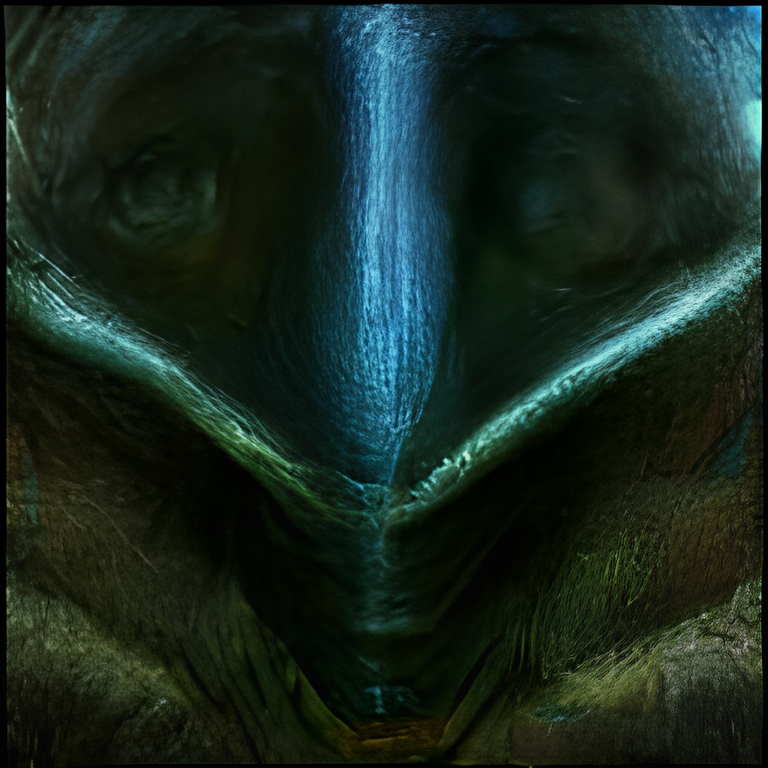

Here are some images from my first AI diffusion model:

These are all upscaled using SwinIR.

KIDLIT Diffusion

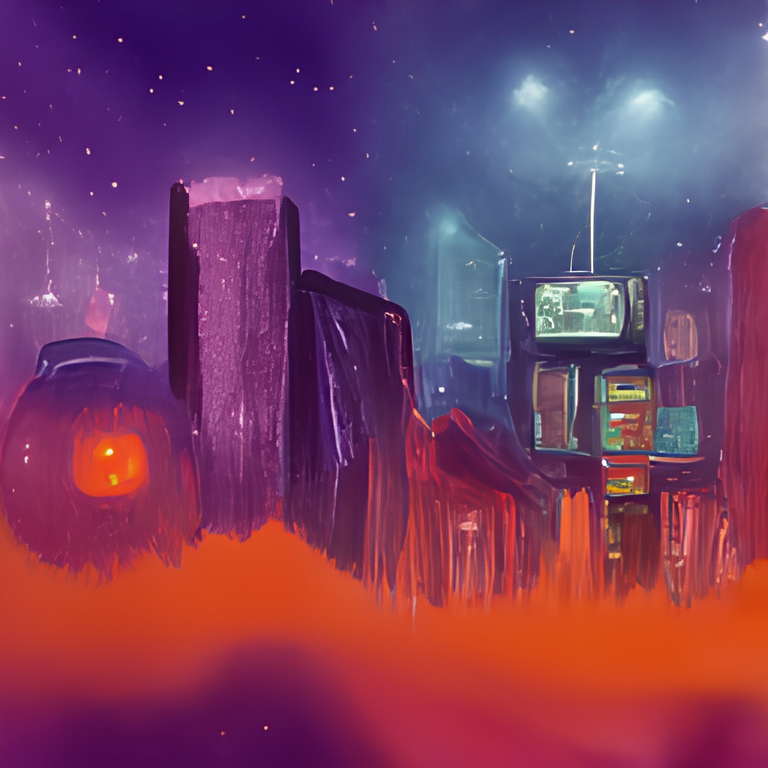

Here are some images from my second model, which we (my wife and I) call the KIDLIT Diffusion model. The dataset I used for training this model came entirely from my wife's digital art, which she made using Procreate. Here are some generated images:

I am absolutely in love with the images produced by the KIDLIT Diffusion model!

Closing Thoughts

When are custom diffusion models needed?

I feel when you have very specific requirements, it is better to train your own model with the appropriate dataset. Kaliyuga's pixel art diffusion notebook is an excellent example.

On the other hand, if you are just starting out or your needs are more varied, it is better to stick with the OpenAI diffusion models and explore first.

Some tips while training

I am certainly not an expert but these are things I noticed while training my models.

Train with the highest-quality images you have and can afford to train on. Higher-quality images produce larger training checkpoints, and I think they take longer to train too. The upside is that you can probably get away with fewer training steps.

As you can see in the images produced with my first custom model, they are not of great quality, especially after upscaling. This is because I trained the model with just 256x256 images. Also, if I try to generate an image at a higher resolution than that, I run into tiling issues.

On the other hand, with my second model, the KIDLIT Diffusion one, I can tell you that most of the images have not been upscaled. I rendered at 1024 x 768 too and there were no tiling issues.

I think this is because I did not resize the images this time while training. Many of the images were 2500x2500 and some were even larger. I left them as they were, and you can see the quality of the output. "You reap what you sow" is very true here.

Also, it is better to crop your dataset images into square formats.
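For anyone who wants to automate the square cropping, here is a minimal sketch of one way to do it. It assumes the Pillow library is installed and simply keeps the centre of each image; the function names and folder arguments are made up for illustration, and this is not the exact procedure I used for my own datasets.

```python
from pathlib import Path


def center_square_box(width: int, height: int) -> tuple[int, int, int, int]:
    """Return the (left, upper, right, lower) box of the largest centred square."""
    side = min(width, height)
    left = (width - side) // 2
    top = (height - side) // 2
    return (left, top, left + side, top + side)


def crop_folder_to_squares(src_dir: str, dst_dir: str) -> None:
    """Write a centre-cropped square copy of every PNG/JPEG in src_dir to dst_dir."""
    # Assumption: Pillow is installed. It is imported here so the box helper
    # above can be used on its own without it.
    from PIL import Image

    out = Path(dst_dir)
    out.mkdir(parents=True, exist_ok=True)
    for src in sorted(Path(src_dir).iterdir()):
        if src.suffix.lower() not in {".png", ".jpg", ".jpeg"}:
            continue  # ignore anything that is not an image we expect
        with Image.open(src) as img:
            img.crop(center_square_box(*img.size)).save(out / src.name)
```

A centre crop can cut off the subject if it sits near an edge, so it is worth looking through the results and cropping those images by hand.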

These are my conclusions from my experiences. I might be very wrong about some things; as I said I am not an expert. If someone notices something wrong here, please let me know in the comments.

That's all for today. Hope you found this useful and entertaining. See you in the next one!

Do you want to generate similar art with the help of AI (or maybe something entirely different)? It is quite simple to get started. You can get started here.

This post has been manually curated by @bhattg from Indiaunited community. Join us on our Discord Server.

Thank you @bhattg and @indiaunited :)

You and Kali have been doing an excellent job!

Thank you:). She is doing much more... She is more like an AI scientist!

I am so glad to see these interests taking on a life of their own. I have a feeling we will have AI to help us out creating graphic novels and/or games without having to code as much. The more the models get trained up and as they diversify... it's only a matter of time before they start to converge in cutting corners for bigger projects.

It can already happen... Kaliyuga's pixel art diffusion can be used to generate backgrounds or even art and assets for pixel art games. She is also working on a sprite sheet diffusion model, so we can make the characters with that too!

@dbddv01 is already making comics... only a matter of time before novels come out. And people are already using the GPT3 models to generate code for pretty much any task...

Exciting times!

I was thinking of dbddv01 when I mentioned comics, and I came across a comment from Kali on Twitter, I think, mentioning the sprite sheet diffusion model being engineered for video game purposes. The code generator is something I hadn't heard about until now! These are definitely exciting times!

Check this out :)

https://twitter.com/OpenAI/status/1425153851095543811?s=20&t=F7q9UnXBWr05cV6tgUyI4w

Lovely surreal pieces being formulated. AI is so interesting and such a great tool for artists. @lavista

Hey, thank you so much:)

AI is a great tool for people who are not so artistic (in the traditional sense) too, like me :)

Awesome models!!! I'm so happy to see other people starting to do this :)

Thank you so much:). Like I have said before, you were an inspiration!

Hi, @lavista wow awesome, I need one like this for Horror and collages.

I don't have a current computer, but I hope someday to have one to train my models.

Excellent, congratulations

Hi @eve66 . Thank you so much:).

You don’t need a modern computer to do all this. Any device that can run a modern browser, plus a Google Colab subscription, is sufficient. Just follow Kaliyuga's tutorial... The training is done using Colab notebooks.

I will try it, Thank you

Looking forward to your results too:)

Hi @lavista, thanks for your experimental notes about the training. I may have missed the info, but how many pictures did you gather to make the model? What were your selection criteria for a picture to be part of your dataset (besides HQ size)? Is Colab Pro enough? What was the processing time?

@Kaliyuga's PAD and now your model are opening new mind-bending levels of latent space exploration. This is magic.

Hi @dbddv01! I duplicated most of the images by flipping them horizontally, so I had a total of 132 images.
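In case it helps, the flipping step can be scripted. This is only a rough sketch, assuming the Pillow library and a folder of PNG/JPEG files; the `_flipped` suffix and the function names are hypothetical choices for this example, not something the training notebook requires.

```python
from pathlib import Path


def flipped_path(src: Path) -> Path:
    """Name for the mirrored copy, e.g. art.png -> art_flipped.png."""
    return src.with_name(f"{src.stem}_flipped{src.suffix}")


def add_flipped_copies(folder: str) -> int:
    """Save a horizontally mirrored copy next to each image; return how many were made."""
    # Assumption: Pillow is installed. It is imported here so the naming
    # helper above works without it.
    from PIL import Image, ImageOps

    count = 0
    # sorted() builds the full file list first, so new copies are not revisited.
    for src in sorted(Path(folder).iterdir()):
        if src.suffix.lower() not in {".png", ".jpg", ".jpeg"}:
            continue
        if src.stem.endswith("_flipped"):
            continue  # skip copies left over from an earlier run
        with Image.open(src) as img:
            ImageOps.mirror(img).save(flipped_path(src))  # left-right flip
        count += 1
    return count
```

Flipping only makes sense for images that still look right when mirrored, so anything with text or a signature in it is better left out.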

Regarding the selection criteria, it depends on what you want to do with the model you create. I wanted the children’s illustration / fantasy kind of look, so I chose images accordingly, which was basically most of my wife's work. Also, I tried to select mostly square-format images. I do have some rectangular images in my dataset, but I believe the training program crops the non-square images.

Colab Pro is enough; I did it with Colab Pro. I trained my first model for several days, and the training step count was 152000 when I stopped. With my second model, I started getting decent results at 22000 steps and I stopped training at 32000 steps, as I liked the results already. This took only a couple of days of training, and I was not training 24 hours a day. Say I trained for around 7-8 hours per day for 2.5 days; that's around 24 hours of training for the second model to get good results.

To be honest, with my first model, at around 10000 steps, I wanted to see if it would produce anything. I ran it and this was the image produced:

It indeed felt like magic and I was so satisfied and happy!:).

Looking forward to what you come up with now!

Thanks a lot for those details. It looks technically quite affordable; I will have to think about it now. This is exciting!

I'm just butting in to say that more images are probably better! I'd recommend 1000 or more so that you don't overtrain :)

Definitely. The more the better :)