The Pace Of AI

How fast is AI going?

Many have heard about Moore's Law. This is the observation that the number of transistors on an integrated circuit doubles roughly every 2 years.

Gordon Moore made this observation in the 1960s. Experts cite it as the driver behind the major advances of what is known as the semiconductor era (since the 1980s).

It is easy to see the progress made over the last 40 years. Society changed a great deal. The period from 1980 to 2000 introduced a variety of devices and technologies, many of them based upon the semiconductor.

The 20-year period after that was, from the user's perspective, rather slow. We saw the smartphone, which was really a convergence of existing technologies. There was also streaming, made possible by advancements in our communication systems.

Based upon my research, there are roughly 20-year cycles of societal explosions relating to technology. That means we are in another such period, lasting until around 2040.

Let us look at what is driving the next wave.


AI is everywhere.

People are wondering whether it is in a hype cycle. Many speculate that the bubble is going to pop at any time.

This is not the case. While some companies may be insanely priced, we will detail the exponentials to explain what is taking place.

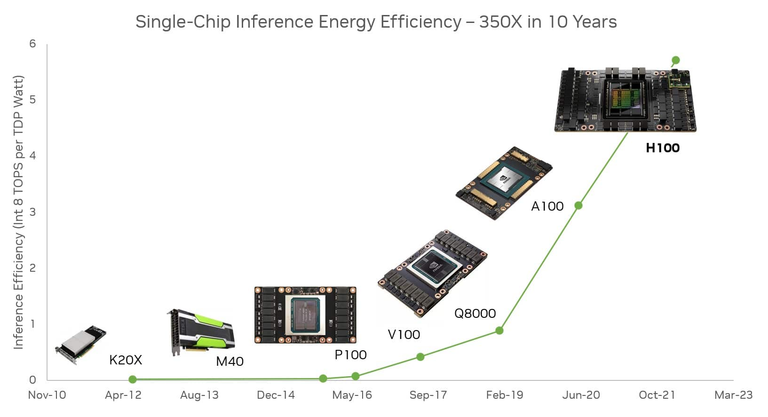

Here is a chart of NVIDIA GPUs, running through the Hopper series. It is an exponential chart showing the massive progress.

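Before commenting on that chart, it is important to note that Moore's Law provides roughly a 100x gain over a decade, meaning computing power is about 100 times what it was ten years earlier. (That figure corresponds to doubling about every 18 months; a strict two-year doubling works out to roughly 32x per decade.)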
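The decade figures above follow from simple compounding. A minimal sketch, assuming a clean, constant doubling period (real transistor counts never grew that smoothly), with a hypothetical helper named `growth_factor`:

```python
def growth_factor(years: float, doubling_period_years: float) -> float:
    """Total multiplication factor after `years` if the quantity doubles every `doubling_period_years`."""
    return 2 ** (years / doubling_period_years)


if __name__ == "__main__":
    # Strict two-year doubling over a decade: 2**5 = 32x
    print(f"2-year doubling, 10 years:   {growth_factor(10, 2):.0f}x")
    # 18-month doubling over a decade: about 101.6x, i.e. the "roughly 100x" figure
    print(f"18-month doubling, 10 years: {growth_factor(10, 1.5):.1f}x")
```

The gap between 32x and roughly 100x is why the doubling period quoted for Moore's Law matters so much over a ten-year horizon.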

As we can see, NVIDIA was able to crush that with its GPUs. From 2012 to 2021, there was a 350x increase in the efficiency of the chips, meaning a lot more output per chip. That is also why advancement in the AI world really accelerated over the last decade. Again, this was not so visible at the user end until ChatGPT put an interface on it.

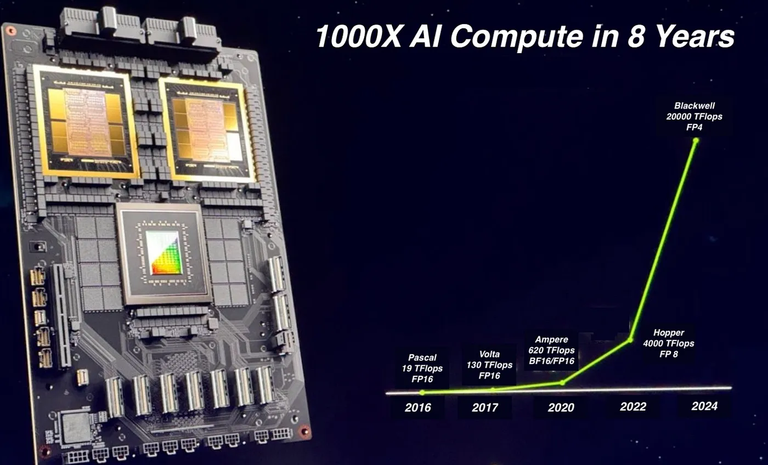

That said, when we extend the exponential chart, we get the next one. It is the same view shifted forward in time, with the Blackwell line added.

Here we start from 2016 and see a 1000x gain in 8 years. Instead of 350x in 10 years, we almost tripled that in two fewer years.
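One way to compare these figures on equal footing is to convert each total gain into a compound annual rate and a doubling time. The sketch below uses the numbers as the post frames them (100x per decade for Moore's Law, 350x over 10 years through Hopper, 1000x over 8 years with Blackwell) and assumes smooth exponential growth between the endpoints; the helper names are hypothetical.

```python
import math


def annual_rate(total_factor: float, years: float) -> float:
    """Compound annual multiplier implied by `total_factor` growth over `years`."""
    return total_factor ** (1 / years)


def doubling_time(total_factor: float, years: float) -> float:
    """Years needed to double at the same compound rate."""
    return years * math.log(2) / math.log(total_factor)


if __name__ == "__main__":
    cases = {
        "Moore's Law (100x / 10 yr)": (100, 10),
        "GPUs through Hopper (350x / 10 yr)": (350, 10),
        "GPUs incl. Blackwell (1000x / 8 yr)": (1000, 8),
    }
    for label, (factor, years) in cases.items():
        print(f"{label}: {annual_rate(factor, years):.2f}x per year, "
              f"doubling every {doubling_time(factor, years):.2f} years")
```

Under these assumptions the rates come out to roughly 1.58x, 1.80x and 2.37x per year, with doubling times of about 1.5, 1.2 and 0.8 years respectively.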

Compute is one of the key components of AI. We can see the push higher over the last couple of years.

All of this explains why we are suddenly seeing massive jumps in the capabilities of the models that are trained.

There is one caveat: Blackwell, as I am writing this, has not started shipping.

That means all the data processing being done is still, at best, using the Hopper series. We have not yet seen the impact of the next computing beast to emerge.

Models Get Cheaper To Train

Over time, models get cheaper to train, at least the older ones.

Many have heard the numbers tossed around regarding the billions needed to train newer models. That is true, yet it is not what I am referring to.

Thanks to the gains in processing, we are going to see older models trained for a lot less money.

For example, many are building their present models at the GPT-2 level. A model like that can be trained for thousands of dollars. Training at the GPT-4 level presently costs in the tens of millions.

What happens in a couple of years, when the GPT-3 level can be achieved for $10,000? Think of all the companies that could build their own system. Will it be the latest and greatest? No. It will, however, be sufficient for many use cases.

This also helps to democratize AI. Each year, the cost of compute comes down, allowing older models to be trained for less.

By 2028, the $10,000 figure might apply to the GPT-4 level (if it even takes that long).
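To get a feel for what that projection implies, we can back out the yearly cost decline it requires. This is a rough sketch under loudly stated assumptions: the post only says GPT-4-level training costs "tens of millions", so the $20M starting figure is a hypothetical placeholder, and 2024 as the starting year is assumed from the note that Blackwell had not yet shipped. The helper names are also hypothetical.

```python
import math


def implied_annual_decline(start_cost: float, target_cost: float, years: float) -> float:
    """Factor by which cost must fall each year to go from start_cost to target_cost in `years`."""
    return (start_cost / target_cost) ** (1 / years)


def years_to_target(start_cost: float, target_cost: float, annual_decline: float) -> float:
    """Years until start_cost falls to target_cost if it shrinks by `annual_decline`x per year."""
    return math.log(start_cost / target_cost) / math.log(annual_decline)


if __name__ == "__main__":
    # Hypothetical inputs: $20M is an illustrative placeholder, not a reported figure.
    start_cost = 20_000_000
    target_cost = 10_000
    years = 2028 - 2024  # assumes the post was written in 2024
    factor = implied_annual_decline(start_cost, target_cost, years)
    print(f"Cost must fall about {factor:.1f}x per year")
    # Sanity check: at that rate, the target is reached in `years` years
    print(f"Years to target: {years_to_target(start_cost, target_cost, factor):.1f}")
```

With these placeholder inputs the cost would need to drop by roughly 6.7x per year. A different starting cost shifts the answer, which is why it is worth plugging in your own estimate.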

Exponential rates in compute need to be combined with similar rates in data. We have covered this on a number of occasions.

The key is not the "under the hood" stuff; that is simply powering everything. What will change is the applications, which is where the ability to put all this to use is emerging. We did not see a great deal of technological innovation at the societal level over the last 20 years. Those days are gone for a while.

We will see all this horsepower put to use. AI is going to be integrated into everything. This will be done via AI agents, which are going to take over the Internet.

And now we can see what is driving it all.

Posted Using InLeo Alpha
