RE: LeoThread 2024-12-11 08:21


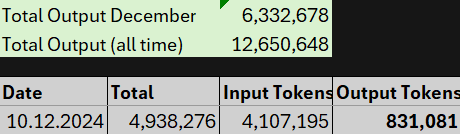

Summary Stats from December 10, 2024

Yesterday, the YouTube Summarizer processed a total of 4.1 million input tokens, producing approximately 831,000 output tokens that were posted to the chain. That's equivalent to about 2,300 book pages, just shy of our all-time daily record (860k output tokens)!

Keep up the excellent work, and keep summarizing! #summarystats
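For anyone curious how tokens translate into "book pages", here is a rough back-of-the-envelope sketch. It assumes about 0.75 English words per token (OpenAI's commonly cited rule of thumb) and about 270 words per printed page; the page figure is an illustrative assumption, not something stated in the post, so treat the result as an estimate only.

```python
# Rough conversion from output tokens to book pages.
# Assumptions (not from the original post): ~0.75 English words per token
# and ~270 words per printed book page.
WORDS_PER_TOKEN = 0.75
WORDS_PER_PAGE = 270


def tokens_to_pages(tokens: int) -> float:
    """Estimate how many book pages a number of tokens would fill."""
    words = tokens * WORDS_PER_TOKEN
    return words / WORDS_PER_PAGE


if __name__ == "__main__":
    # Yesterday's output vs. the daily record mentioned above.
    for label, tokens in [("Dec 10", 831_000), ("record", 860_000)]:
        print(f"{label}: ~{tokens_to_pages(tokens):,.0f} pages")
```

Changing `WORDS_PER_PAGE` shifts the estimate a lot (denser pages mean fewer of them), which is why "about 2,300 pages" should be read as a ballpark rather than an exact figure.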


Well done @taskmaster4450le, @calebmarvel01, @falcon97 and @coyotelation 👏👏

Along with all the others contributing!

Yeah, sorry if I missed anyone 🦁

Feel free to drop their names here; I just want to send some encouragement.

Awesome, let's keep the momentum going.

What is this all about, my friend?

I'm glad you asked! You can read all about it in my last longform post: https://inleo.io/@mightpossibly/aisummaries-weekly-report-1-bgn?referral=mightpossibly

This means that those who do videos on Hive can easily get a summary transcript to attach to their videos upon posting.

That's right! And the data is added to the network as well. Win win 😁

2,300 book pages! This is a major boost in data.

I have to improve; this drop must have been due to my poor performance.

Great work my friend!

When you refer to tokens, do you mean bot comments?

Haha, December 10 was our second-best day yet! No worries, we contribute when we can; everyone has other stuff to do as well :)

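Tokens can be thought of as pieces of words. Here are some helpful rules of thumb for understanding tokens in terms of length:

1 token ≈ 4 characters in English
1 token ≈ ¾ of a word
100 tokens ≈ 75 words

Or

1-2 sentences ≈ 30 tokens
1 paragraph ≈ 100 tokens
1,500 words ≈ 2,048 tokens

Source: help.openai.com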
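To make those rules of thumb concrete, here is a minimal sketch that estimates a token count from plain text in two ways: by characters (about 4 per token) and by words (about 75 words per 100 tokens). These are approximations only; real tokenizers split text differently depending on the model and language, so the function names and results here are illustrative, not an exact count.

```python
# Approximate token counts using the rules of thumb above.
# These are estimates; an actual tokenizer will give different numbers.


def estimate_tokens_from_chars(text: str) -> int:
    """~4 characters of English text per token."""
    return round(len(text) / 4)


def estimate_tokens_from_words(text: str) -> int:
    """~100 tokens per 75 words, i.e. ~4/3 tokens per word."""
    words = len(text.split())
    return round(words * 4 / 3)


if __name__ == "__main__":
    sample = "Tokens can be thought of as pieces of words."
    print("by chars:", estimate_tokens_from_chars(sample))
    print("by words:", estimate_tokens_from_words(sample))
```

The two estimates usually land close to each other but rarely match exactly, which is a good reminder that these rules are only for getting a feel for lengths.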

I got it.

Thanks for the explanation. These terms are new to me, hahaha...

Hi, @coyotelation,

This post has been voted on by @darkcloaks because you are an active member of the Darkcloaks gaming community.

Get started with Darkcloaks today, and follow us on Inleo for the latest updates.
