Free Open Source Chatgpt Tutorial

Hello everyone, long time no posting, as usual, and as usual I have something important to say.

I have been poking around with LLM models for a while now, in particular with the open source ones, then obviously i have been talking with Gemini a LOT while writing code, but that's not the point, gemini is NOT open source, and a consequence of that, despite how easy it is tom use it in a very productive way, is that everything you do with it is ownership of google mum... and we are independent developers aren't we?

I mean, what if Elon Musk buys google and decides that Jilt is not nice enough to use it?

I'm not digging into politics, not even into Elon Musk, I just mentioned him to prove my point, AI has become that big in the last years, so big that we all have a responsibility to work towards it as a public good, and I use open source technologies from my university years because of that, I wouldn't even be so much into the blockchain if i didn't believe in the collective mind enough to invest on it.

Then I found myself digging into the mistral models, but given the rise of autonomous crypto incentivizing models in the virtuals ecosystem I really wanted my model to be able to execute crypto tasks like rewarding people based on social interactions, like all the virtuals AI agents already do.

And that is called Function Calling.

Now Mistral does all of that, and the model I chose, is a finetuned version of the last Mistral model, created by huggingface itself and converted into a gguf model.

Now a gguf model is a quantized AI model that allows me to run it on CPUs, not GPUs with almost the same results as the Mistral model itself with a 30 to 60 seconds lag (which is not that bad) for free.

Yes you read me well, I'm teaching you how to create your own chatGPT API to connect with all the apps that fancy devs teach you on youtube while asking you to buy the chatGPT membership... but for free.

And here we need to be grateful to Hugging Face and its mission for democratizing AI just as a first step, because gratitude will make us happier while we hustle with code, then we can begin.

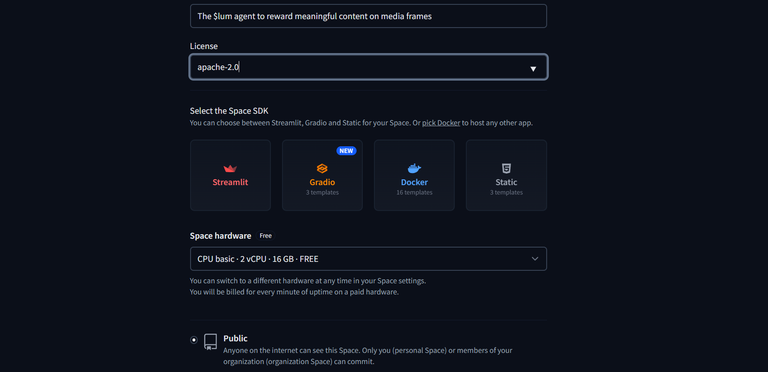

We are going to use a Hugging Face space to deploy our LLM, using CPU Basic, 2 vCPU with 16GB RAM, and 50GB Storage Free, so you need to create an Hugging Face user before we begin.

The portal works basically like github, but large files cannot be uploaded into spaces, and our LLM model is 4gb (it's small because it's quantized but it's still too large for huggingface) i'll tell you the solution after a little python lesson, just a minute.

We can run this gguf LLM on the CPU with the help of Ctransformers. CTransformer library is a Python package that provides access to Transformer models implemented in C/C++ using the GGML library.

GGML

GGML is a C library for machine learning. It helps run large language models (LLMs) on regular computer chips (CPUs). It uses a special way of representing data (binary format) to share these models. To make it work well on common hardware, GGML uses a technique called quantization. This technique comes in different levels, like 4-bit, 5-bit, and 8-bit quantization. Each level has its own balance between efficiency and performance.

Drawbacks

The main drawback here is latency. Since this model will run on CPUs, we won’t receive responses as quickly as a model deployed on a GPU , but the latency is not excessive. On average, it takes around 1 minute to generate 140–150 tokens on huggingface space. Actually, it performed quite well on local system with a 16-core CPU, providing responses is less than 15 sec.

Don’t get confused. GGUF is updated version of GGML, offering more flexibility, extensibility, and compatibility. It aims to simplify the user experience and accommodate various models. GGML, while a valuable early effort, had limitations that GGUF seeks to overcome.

Zephyr GGUF is the model we are using

Lets Begin

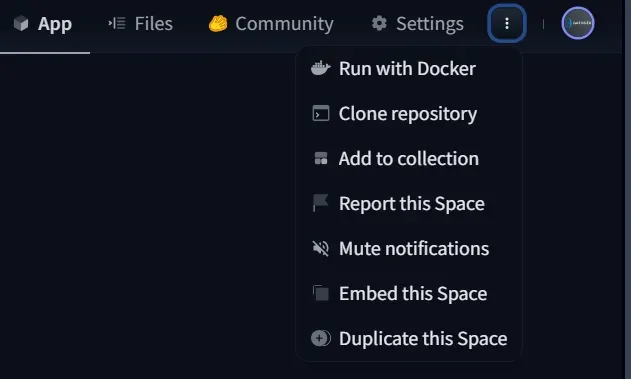

To avoid the large files problem I suggest to go to this link click on the triple points menu on the upper right (beside the "Community" button) and click "Duplicate Space", fast and easy.

I could link you my space, but we need some step to get to the autonomous agents, and i want you to understand them.

Now the "building" label should be visible on the maun menu bar of your space signaling that the space is installing the required python libraries, if you click the "files" button you should see a list like this:

├── Readme.md

├── Dockerfile

├── main.py

├── requirements.txt

└── zephyr-7b-beta.Q4_K_S.gguf

You canc hange the readme as you prefer, giving the right name, license and desccription to your space, after each commit the space will need at least 5 minutes to rebuild, so handle typos with care.

After the building process is completed succesfully you should be able to access the newly deployed API docs (auto generated) at the link:

https://user-spacename.hf.space/docs

For example the repo we copied the docs are in:

https://gathnex-llm-deployment-zerocost-api.hf.space/docs

and endpoint to query to get the LLM to respond to your prompts (formatted as explained in the docs) is:

https://gathnex-llm-deployment-zerocost-api.hf.space/llm_on_cpu

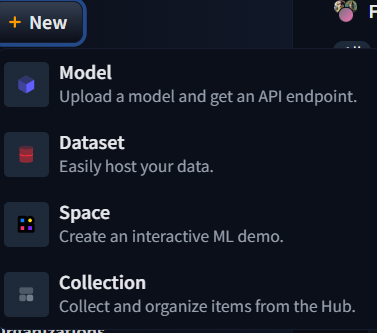

Now what we have done is actuallythe very same as clicking on "New" in the Hugging Face home page and selecting space

Then selecting the right kind of space (the CPU free one)

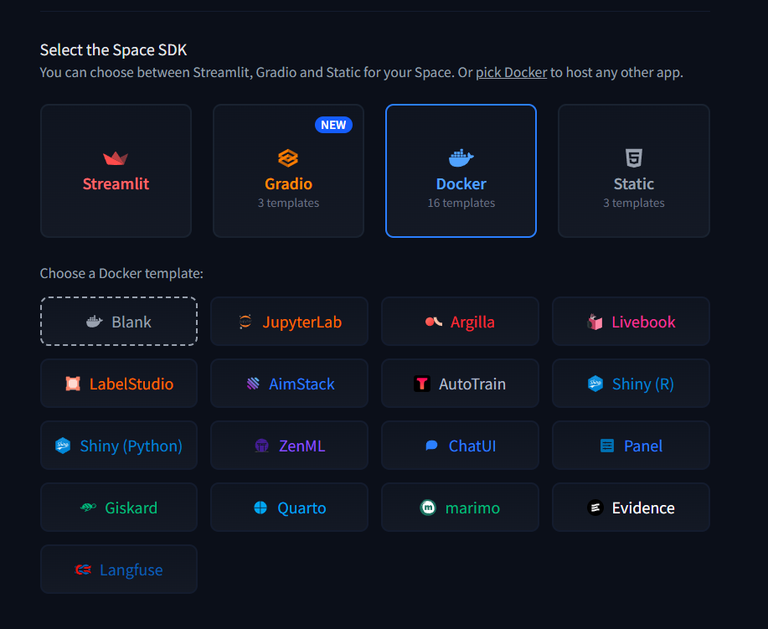

And the docker Blank configuration

But we duplicated the space remember?

So we skipped all that, now we can step towards function calling.

To implement a simple endpoint connected to a function that adds +1 to a numeric variable we can select the main.py file and click the "edit" button after it loads, the we change the code as this:

from ctransformers import AutoModelForCausalLM

from fastapi import FastAPI, Form

from pydantic import BaseModel

import re

# Model loading

llm = AutoModelForCausalLM.from_pretrained(

"zephyr-7b-beta.Q4_K_S.gguf",

model_type='mistral',

max_new_tokens=1096,

threads=3,

)

# Pydantic object for input validation

class Validation(BaseModel):

prompt: str

# FastAPI app

app = FastAPI()

# Global counter

counter = 0

# Modified increment function

def increment_and_print(value: int):

global counter

counter += value

print(f"Counter: {counter}")

return counter

# Zephyr completion (existing endpoint - unchanged)

@app.post("/llm_on_cpu")

async def stream(item: Validation):

system_prompt = 'Below is an instruction that describes a task. Write a response that appropriately completes the request.'

E_INST = "</s>"

user, assistant = "<|user|>", "<|assistant|>"

@app.post("/increment_counter")

async def increment():

result = increment_and_print(1)

return {"counter": result}

Now if you commit the code you'll find a new endpoint in the Docs that triggers the "increment_and_print" function to add 1 to our variable.

And this my friends is function calling.

But let's add an extra shall we?

Because we want our Agent to be a fully articulated crypto autonomous agent, we deeply despise the simple chatGPT API, we are evolved for decentralization.

So we're gonna add an endpoint that adds a custom numeric value to our variable, this way you can setup your transfer functions to be cutomizable as you need.

from ctransformers import AutoModelForCausalLM

from fastapi import FastAPI, Form

from pydantic import BaseModel

import re

# Model loading

llm = AutoModelForCausalLM.from_pretrained(

"zephyr-7b-beta.Q4_K_S.gguf",

model_type='mistral',

max_new_tokens=1096,

threads=3,

)

# Pydantic object for input validation

class Validation(BaseModel):

prompt: str

# FastAPI app

app = FastAPI()

# Global counter

counter = 0

# Modified increment function

def increment_and_print(value: int):

global counter

counter += value

print(f"Counter: {counter}")

return counter

# Zephyr completion (existing endpoint - unchanged)

@app.post("/llm_on_cpu")

async def stream(item: Validation):

system_prompt = 'Below is an instruction that describes a task. Write a response that appropriately completes the request.'

E_INST = "</s>"

user, assistant = "<|user|>", "<|assistant|>"

prompt = f"{system_prompt}{E_INST}\n{user}\n{item.prompt.strip()}{E_INST}\n{assistant}\n"

return llm(prompt)

# New endpoint for incrementing with prompt value

@app.post("/increment_from_prompt")

async def increment_from_prompt(item: Validation):

match = re.search(r'\d+', item.prompt)

if match:

increment_value = int(match.group())

result = increment_and_print(increment_value)

else:

result = increment_and_print(0)

return {"counter": result}

# New endpoint

@app.post("/increment_counter")

async def increment():

result = increment_and_print(1)

return {"counter": result}

et voilà.

Copy and paste the new main.py function and wait for the build to be complete, you'll get documentation generated for your endpoints to be used at the link above.

If you want to setup a wallet for the agent you'd better put the secret key in a secret variable as described here.

I'll try and keep up with your comments if you find any problem in setting up your open source chatGPT API!!!

Thank you so much for the tutorial! Many interesting blockchain uses for AI models have been coming into my awareness lately, and I'm very interested to explore them! I also really appreciate you working with open-source code. It's important anyway, but even more so with AI models. 😁 🙏 💚 ✨ 🤙

Thank you for the answer, I hope the article helps :)

Absolutely, you're most welcome! Indeed, I'm bookmarking it! 😁 🙏 💚 ✨ 🤙

I have received some requests by devs that look for people to test their Blockchain platforms using open source models to generate code, you have the idea and the model writes the code for you, just let me know if you're interested!

That's so interesting, and yes, I've seen some models doing quite well with coding. Grok3 is making a bit of a name for itself in that regard. Well, we have an excellent coder on our team (The We Are Alive Tribe and the MMB Project), but this idea fascinates me, so yes, I'm interested. I'm working on learning to code, to contribute more fully, but this sort of thing would be very helpful indeed. 😁 🙏 💚 ✨ 🤙

I think knowing how code works is most important when you talk to these models, so even if you use them keep learning!

That would be my hope if I were to make use of them, since they do so much of the heavy lifting, that I would keep learning. 😁 🙏 💚 ✨ 🤙

Great!

Thank you! I'm sorry for the late reply and vote, I'll be posting more soon, thank you for the follow!

Thank you!

Congratulations @jilt! You have completed the following achievement on the Hive blockchain And have been rewarded with New badge(s)

Your next target is to reach 54000 upvotes.

You can view your badges on your board and compare yourself to others in the Ranking

If you no longer want to receive notifications, reply to this comment with the word

STOPInteresting!

Thanks!