Everything You Share in Public Online Spaces Can Be Training Data

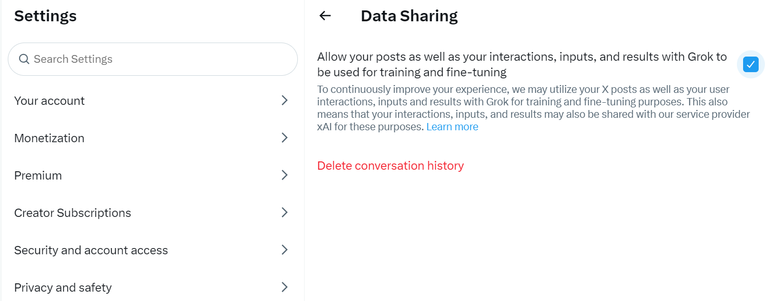

X uses your data to train its AI, Grok, and this setting is on by default. This guide has been making the rounds on my X feed.

To opt out:

Settings -> Privacy and Safety -> find the Grok option and uncheck it.

On online art sites like DeviantArt, you can tag your posts as "No AI" to signal scrapers not to use your content, and this magically makes corporations and private entities respect your wishes. Lol, no. It's just a notice. If you post art, you can run the work through Glaze or Nightshade to protect it from style mimicry or poison the training data.

I don't use these programs yet, but I'm doing my part by poisoning the AI with bad art and horrible drawings. You can thank me later for generated images with bad hands.

Every major corporation out there wants a piece of the AI action, and they'll incorporate it into their platforms one way or another. Because sites like Facebook and X treat user attention and input as their product, expect this to become the norm on every mainstream social site. Most of the public is unaware and willingly hands over sensitive information during sign-up, ticking the terms and conditions box without reading it.

You know the content you put out on Hive? That can be scraped too, and posting a notice that you don't consent does nothing. There's no escaping it as long as you're publicly online. I stopped giving it much thought after concluding there's little I can do about it as an individual. It's not as if the AI is tailored specifically to me; it targets ads at a demographic that shares my interests and online habits, and it might even be good if it shows me products I'd be interested in buying or subtly improves my user experience.

While I can opt out with my account, thousands of other users won't, and by the time they do, enough data will already be integrated into the AI, since we don't really know when the scraping started. We only find out it's been done when the feature is scheduled to go live.

https://x.com/MKBHD/status/1816487078265344313

The label "publicly available" is a fancy way of letting scrapers take any data in a public space without consent or regard for the rights of whoever owns the content. I know a lot of YouTubers didn't know about this or how their content would be monetized in a different form. It's as if YouTubers can't catch a break, on top of YouTube's own monetization problems for creators.

There are artists who willingly offer their work for the AI to learn from, but to be effective at generating images, a model needs billions of pieces of content to pump out what you want from a prompt. You'd have to create millions to billions of images, then tag each one individually so the AI can use them as training data. It's a gray area: as an artist you fully consent to giving your work to train the AI, but that AI also uses other artists' work, much of it from artists who are already dead.

Dead artists can't give consent, and it's just tacky to credit a piece as fully yours when the software has instilled bits of Michelangelo, Leonardo da Vinci and other nameless artists into it. You gave a piece of your work to the AI to learn from, contributing 0.0000001% of the content, and now it's 100% your imagination and prompt because it's trained on your style? I know I'm exaggerating, but I hope the thought sticks.

AI being trained for dating conversations be like:

If this user starts with "Hey!", there are 401k viable follow-ups. If they start with "hey...", the added periods narrow it down to 12k options.

Heya.

Thanks for your time.

It’s now looking like we have to tread carefully.

They are stealing our information and the things we racked our brains to write.

It’s unfair.

There are stock image sites where users upload their videos and photographs for money (provided someone buys a license), such as Shutterstock and Adobe Stock. One thing these platforms actually do right is pay creators (if they agree to the process) when their content ends up licensed out as training data.

It goes to show how disgusting social media practices have become: not only asking you to pay for useless features like additional exposure, but now also taking your data and profiting from it even more. You get nothing. It's insane. Everyone should get a fair share, even if it's mere cents from ad revenue or from training data use.

The controversy here is that creators gave their consent to the platform before the AI trend started. They weren't getting anything, even though they never signed up for their work to be used as training data.

I agree that paying for more visibility gives you an exposure advantage. Sometimes reach, rather than skill, is what makes the difference in success. But these platforms are businesses and nothing is free. We are the product advertisers want to buy.

Data is the new oil. A guy I listened to a while ago said that many years ago, and people thought he was stupid. Turns out he was damn right.

I think it’s actually a really good idea to throw a wrench into things by intentionally fucking up the hands, so that the AI models that end up using it get messed up themselves. Fuck 'em!